Let me ask you a question: have you worked with developers to integrate your Lambda functions into your application, or are you just using Lambda for some backup automation 😀?

Have you encountered issues such as buffering and throttling, request failures, challenges in handling retries, and difficulties in operating services independently? More importantly, have you faced challenges in scaling your system?

I’m not going to cover all of serverless today, but let’s look at how combining AWS Lambda and SQS alone can offer enormous benefits.

Generally speaking, we have two types of calls between components: synchronous calls and asynchronous calls. To explain these two, let’s deploy an application.

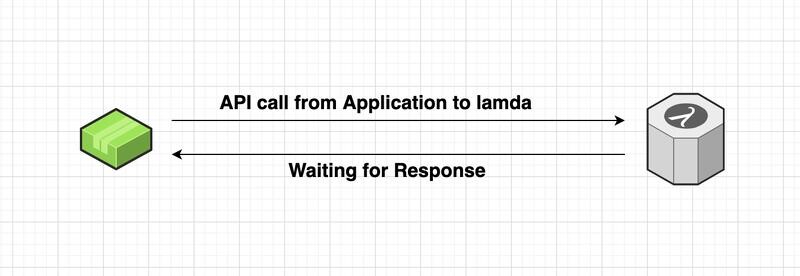

In a synchronous call, you send a request and wait for the response. It’s straightforward and easy to understand. It’s like dealing with one thing at a time, which makes it predictable and makes issues simpler to find and fix.

When you call the Lambda API to accomplish tasks from your web application, it’s like sending a request and waiting for the response.

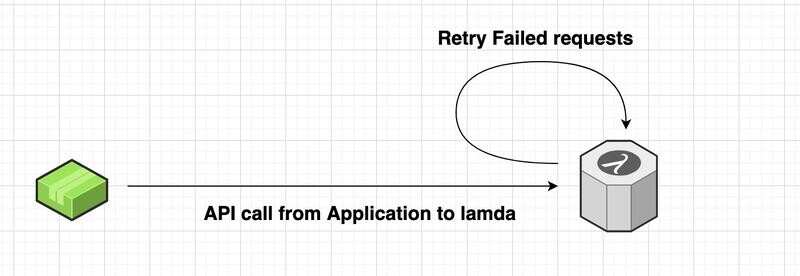

You need to handle any errors in the response explicitly, and if you want retry logic, that’s on your head. If I were you, I’d blame the end user for sending a bad request 😂.
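To make the "retries are on your head" part concrete, here is a minimal sketch of a synchronous invocation with hand-rolled retries. It assumes a boto3 Lambda client is passed in as `client`; the helper name `invoke_sync`, the attempt count, and the backoff delay are illustrative choices, not something Lambda prescribes.

```python
import json
import time


def invoke_sync(client, function_name, payload, max_attempts=3, base_delay=0.5):
    """Invoke a function synchronously and retry function errors ourselves."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        response = client.invoke(
            FunctionName=function_name,
            InvocationType="RequestResponse",  # wait for the result
            Payload=json.dumps(payload).encode("utf-8"),
        )
        body = json.loads(response["Payload"].read())
        # Lambda sets "FunctionError" when the function itself raised an error.
        if "FunctionError" not in response:
            return body
        last_error = body
        if attempt < max_attempts:
            # Simple exponential backoff between attempts (assumed policy).
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(
        f"{function_name} failed after {max_attempts} attempts: {last_error}"
    )


# Usage (assumes boto3 is installed and credentials are configured):
#   import boto3
#   result = invoke_sync(boto3.client("lambda"), "my-function", {"order": 42})
```

Note that this only retries errors raised by the function. Throttling or network errors raised by the client call itself would need their own handling.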

Now, for asynchronous calls, it’s a bit like sending mail. You send it, and it will be delivered, but you don’t sit around waiting for an immediate response. If a request doesn’t work the first time, Lambda retries it for us (twice by default). But here’s the catch: your process needs to be idempotent, meaning it gives the same result even if you run it multiple times. And if the request still fails after those retries, Lambda has our back. If you configure a dead-letter queue, Lambda sends the failed requests there, and we can reprocess them using additional Lambda functions.

- Users don’t have to wait for the web response.

- We can boost performance by letting many tasks happen at the same time without waiting for one to finish before starting the next.

- This opens the door to scaling the system, handling more tasks all at once.

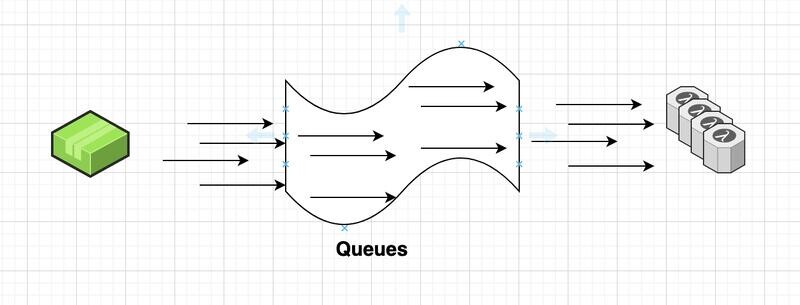
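Here is a small sketch of what idempotency can look like in practice, together with an asynchronous invocation. The `request_id` field and the in-memory set are assumptions made for illustration. A real function would keep processed IDs in a durable store, because memory is not shared across Lambda execution environments.

```python
import json

# Stand-in for a durable store (for example a database table); assumption only.
_processed_ids = set()


def handler(event, context):
    """Process each request at most once, keyed by a caller-supplied ID."""
    request_id = event["request_id"]  # hypothetical field chosen for this sketch
    if request_id in _processed_ids:
        # A retry delivered the same event again: skip the work.
        return {"status": "duplicate", "request_id": request_id}
    # ... the real work would happen here ...
    _processed_ids.add(request_id)
    return {"status": "processed", "request_id": request_id}


def invoke_async(client, function_name, payload):
    """Hand the event to Lambda and return without waiting for the result."""
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # asynchronous invocation
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # Lambda answers 202 when it has accepted the event for async processing.
    return response["StatusCode"] == 202
```

Because the handler checks the ID before doing any work, a retried event produces the same outcome as the first delivery.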

Imagine many requests coming in, and Lambda tries to keep them all in its internal queue. But if your function doesn’t have enough capacity to process them all, some events might expire and get removed from the queue without ever reaching the function. That’s where we need something to manage the longer queue.

Asynchronous invocation with SQS

In a decoupled application, when there’s a heavy load, you have to scale your computing power to handle the requests. Applications like streaming or those dealing with live data need highly scalable architectures. Scaling can be done based on the queue. Exception handling, retries, and a dead letter queue make the process smoother and more manageable.

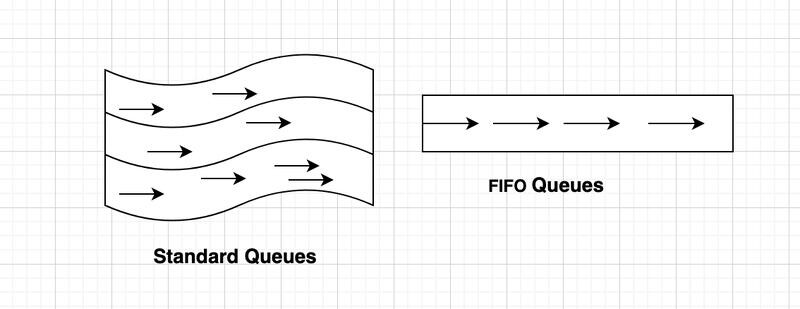
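As a rough sketch, a Lambda function consuming an SQS queue could look like the code below. It assumes the event source mapping has the `ReportBatchItemFailures` response type enabled, so that only the failed messages return to the queue (and eventually to the dead letter queue). `process_order` and the `order_id` field are hypothetical stand-ins for real business logic.

```python
import json


def process_order(order):
    """Hypothetical business logic; raises on bad input."""
    if "order_id" not in order:
        raise ValueError("missing order_id")
    return order["order_id"]


def handler(event, context):
    """Process an SQS batch and report only the messages that failed."""
    failures = []
    for record in event["Records"]:
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            # Failed messages become visible again and are retried by SQS.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Without partial batch responses, one bad message would cause the whole batch to be retried, which is another reason the processing should be idempotent.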

SQS offers two types of queues: Standard and FIFO.

- Standard Queues: Think of them like a multi-lane road. They provide at-least-once delivery, so a message can occasionally arrive more than once, and the order is not guaranteed. Throughput is nearly unlimited.

- FIFO Queues: Picture it as a single-lane road. FIFO queues preserve the order and provide exactly-once processing. By default they support up to 300 API calls per second per action, or up to 3,000 messages per second when you batch 10 messages per call. In contrast, Standard queues don’t have such limits.
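The difference also shows up when sending messages. The sketch below builds the arguments for the SQS `send_message` call: FIFO queues (whose names end in `.fifo`) need a `MessageGroupId`, and a `MessageDeduplicationId` unless content-based deduplication is enabled on the queue. The helper name `build_send_args` and the choice of hashing the body as the deduplication ID are assumptions for illustration.

```python
import hashlib
import json


def build_send_args(queue_url, body, group_id=None):
    """Build keyword arguments for send_message on a Standard or FIFO queue."""
    args = {
        "QueueUrl": queue_url,
        "MessageBody": json.dumps(body, sort_keys=True),
    }
    if queue_url.endswith(".fifo"):
        if group_id is None:
            raise ValueError("FIFO queues require a message group ID")
        # Messages in the same group are delivered in order.
        args["MessageGroupId"] = group_id
        # Identical bodies sent within the deduplication window are dropped.
        args["MessageDeduplicationId"] = hashlib.sha256(
            args["MessageBody"].encode("utf-8")
        ).hexdigest()
    return args


# Usage (assumes boto3 is installed and credentials are configured):
#   import boto3
#   boto3.client("sqs").send_message(**build_send_args(url, {"order_id": 1}, "orders"))
```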

Handling modern applications ideally involves combining synchronous and asynchronous processing to provide users with immediate confirmations for their requests while efficiently managing time-consuming tasks, such as order fulfilment, in the background. This approach enhances user experience by offering prompt responses and ensures system efficiency by offloading resource-intensive operations to asynchronous processes. This combination helps strike a balance between responsiveness and scalability, contributing to a seamless and effective application architecture.
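One way to picture this combination is a synchronous front-end handler that only enqueues the work and confirms right away. This is a minimal sketch, assuming a boto3 SQS client is passed in; `make_order_handler`, the `items` field, and the response shape are hypothetical.

```python
import json
import uuid


def make_order_handler(sqs_client, queue_url):
    """Return a handler that confirms immediately and defers the heavy work."""

    def handler(event, context):
        order_id = str(uuid.uuid4())
        # Enqueue the time-consuming part (fulfilment) for a background consumer.
        sqs_client.send_message(
            QueueUrl=queue_url,
            MessageBody=json.dumps(
                {"order_id": order_id, "items": event.get("items", [])}
            ),
        )
        # The user gets an immediate confirmation instead of waiting.
        return {
            "statusCode": 202,
            "body": json.dumps({"order_id": order_id, "status": "accepted"}),
        }

    return handler
```

The user-facing call stays fast and predictable, while a separate queue consumer handles fulfilment at its own pace.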